How I scaled a legacy Node.js application handling over 40k active long-lived WebSocket connections

I had the opportunity to upgrade a legacy application, and I enjoyed every step of the process. I call it an “opportunity” because I see projects like this as a platform to showcase my skills, put what I know and have researched into action, and learn new things along the way.

Let’s first look at the current state of the application and its limitations, and then at how I improved its overall performance and responsiveness.

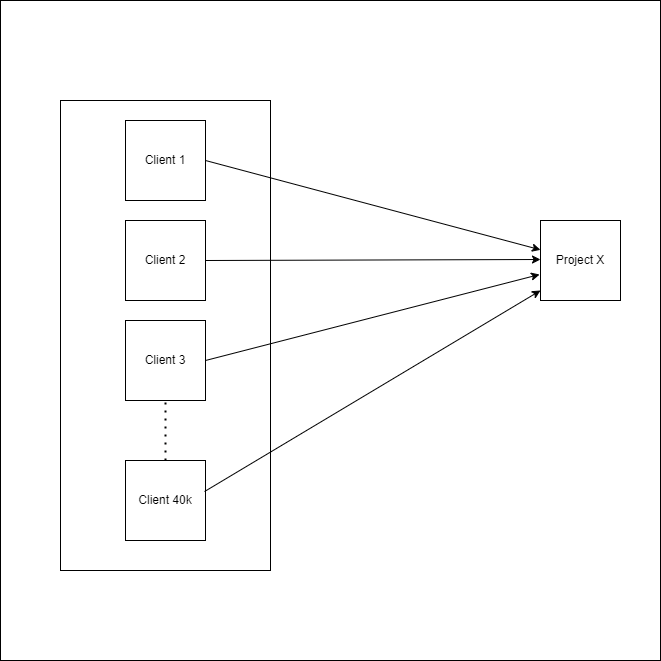

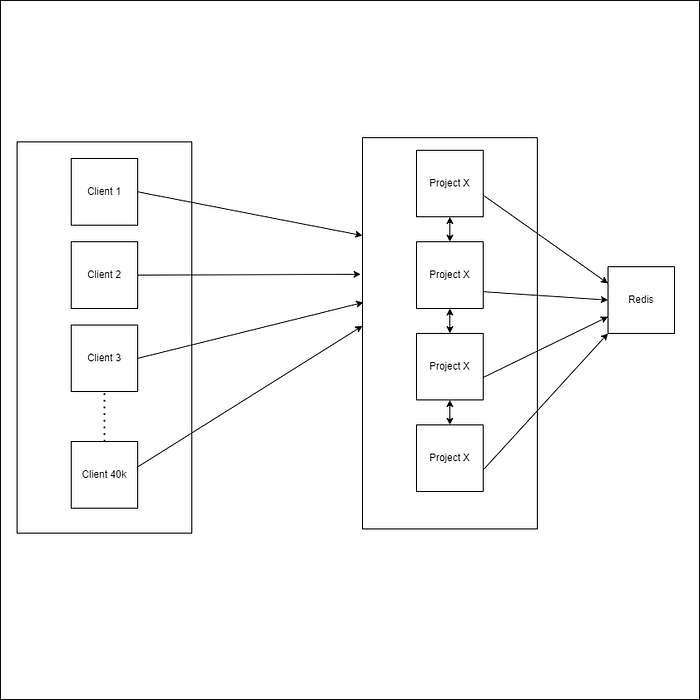

Project X (we’ll call it that for anonymity and simplicity) was tasked with notifying multiple clients about the results of operations performed elsewhere. When a transaction is carried out on Service A, Service A calls Project X via REST APIs, and Project X in turn notifies the relevant clients about the operation so they can act accordingly. Services B, C, and so on work the same way.

When a client of Project X is unavailable (disconnected), Project X stores the outgoing notification in a queue so that it can be sent once the client reconnects.
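A minimal sketch of that store-and-forward behaviour, assuming an in-memory `Map` as the queue and plain objects with a `send` method as sockets. The names `OfflineQueue` and `notify` are hypothetical; with several instances, the queue would have to live in shared storage (for example a Redis list per client) rather than in process memory.

```javascript
// Hypothetical in-memory stand-in for Project X's offline queue.
class OfflineQueue {
  constructor() {
    this.pending = new Map(); // clientId -> array of queued notifications
  }

  // Called when a notification arrives for a disconnected client.
  enqueue(clientId, notification) {
    if (!this.pending.has(clientId)) this.pending.set(clientId, []);
    this.pending.get(clientId).push(notification);
  }

  // Called on reconnection: return everything in arrival order and clear it.
  drain(clientId) {
    const items = this.pending.get(clientId) || [];
    this.pending.delete(clientId);
    return items;
  }
}

// Deliver now if the client has an open socket, otherwise queue for later.
function notify(queue, onlineSockets, clientId, notification) {
  const socket = onlineSockets.get(clientId);
  if (socket) {
    socket.send(JSON.stringify(notification));
    return "sent";
  }
  queue.enqueue(clientId, notification);
  return "queued";
}
```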

Project X also logs each client’s status whenever it connects, disconnects, or reconnects.

Let’s talk about the nature of WebSocket connections a bit, so we can understand the limitations of Project X.

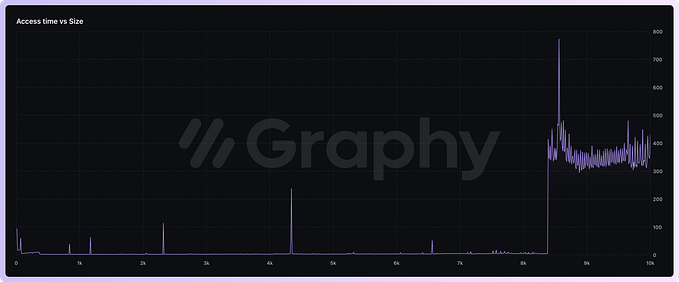

WebSocket connections are a powerful tool for enabling real-time, bidirectional communication between clients and servers. However, managing these connections at scale introduces significant complexity. Each WebSocket connection represents a persistent state that needs to be maintained for its duration, creating challenges around load balancing, fault tolerance, and synchronization across multiple application instances. Without a centralized distributed mechanism to manage connection state, ensuring messages are reliably routed to the correct client or instance becomes error-prone, especially when dealing with large-scale distributed systems.

When deploying multiple instances of the legacy Project X to handle WebSocket connections, several challenges can arise due to the distributed nature of the system. Each instance maintains its own WebSocket connections, leading to a fragmented state where no single instance has a complete picture of all active connections. This can result in issues such as:

- Message Routing Problems: If a client is connected to one instance but a message intended for it is sent from another instance, the message may not be delivered unless the instances are properly coordinated.

- State Synchronization: Tracking the state of each connection — such as its identity, metadata, or subscriptions — across instances becomes challenging. A lack of synchronization can cause stale or inconsistent data when responding to client requests.

- Fault Tolerance: If an instance fails, all WebSocket connections managed by that instance are lost. Without a robust mechanism to redistribute these connections or preserve their state, clients may experience disconnections and degraded service.

- Scaling Complexity: Dynamically scaling the application by adding or removing instances complicates connection management. Instances need a way to share updates about new or dropped connections in real time.

- Resource Contention: Without efficient load balancing, some instances may become overloaded with WebSocket connections, leading to performance degradation or connection throttling.

These challenges make it essential to implement a centralized distributed mechanism, such as Redis, to act as a state store and message broker, enabling seamless coordination across instances.

These were among Project X’s many limitations; since this article is about WebSockets, I’ll keep the scope to them.

Now that you have an idea about the limitations, let’s proceed to how they were handled and optimized.

The first approach was to deploy multiple instances of Project X with sticky sessions. This meant keeping the current architecture of Project X as it was, deploying multiple instances, and ensuring that a client always lands on the same instance whenever it reconnects, which would require introducing a third party such as NGINX at the network layer with a sticky-session configuration. Pairing this approach with Socket.IO and its Redis adapter, which relays events between instances through Redis, would make it better, but it would mean completely rewriting the WebSocket implementation around Socket.IO’s emit-based API, and it still wouldn’t fully solve the issue.

The first approach had further drawbacks: clients behind proxies may have their real IP addresses obscured, and frequent reconnections from a subset of clients pinned to one instance could overload it. Most importantly, we wanted to sleep well at night: we wanted the instances to be as stateless as possible, to be able to add instances on the fly, and to worry less about configuration or proxy issues.
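To see why proxies hurt IP-based stickiness, here is a toy model. It assumes a simple string hash in place of NGINX’s real `ip_hash` algorithm, and `pickInstance` and `distribute` are hypothetical names. Every client behind the same proxy presents the same IP address, so all of them are pinned to a single instance.

```javascript
// Toy model of IP-based sticky sessions: similar in spirit to NGINX ip_hash,
// but NOT its actual algorithm. The instance is chosen from the apparent IP.
function pickInstance(ip, instances) {
  let hash = 0;
  for (const ch of ip) {
    hash = (hash * 31 + ch.charCodeAt(0)) >>> 0; // simple unsigned 32-bit hash
  }
  return instances[hash % instances.length];
}

// Count how many clients land on each instance.
function distribute(clientIps, instances) {
  const load = Object.fromEntries(instances.map((name) => [name, 0]));
  for (const ip of clientIps) {
    load[pickInstance(ip, instances)] += 1;
  }
  return load;
}

const instances = ["x-1", "x-2", "x-3"];

// 1,000 clients behind one corporate proxy all present the same IP.
const behindProxy = Array.from({ length: 1000 }, () => "203.0.113.7");
console.log(distribute(behindProxy, instances)); // all 1000 land on one instance
```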

The solution:

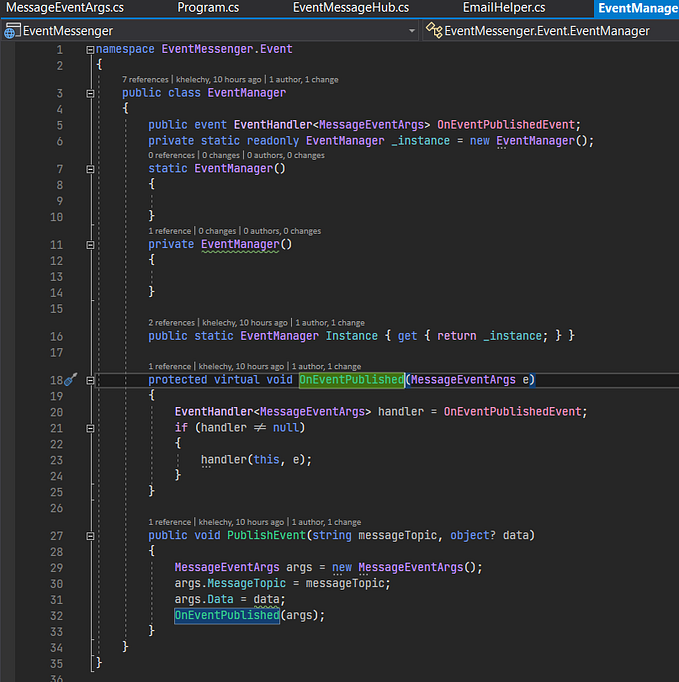

Using only Redis, we achieved exactly what we wanted. Every app instance still managed its own set of long-lived WebSocket connections, but we brokered messages across instances, tracked WebSocket connection state in distributed storage, and handled WebSocket connection events accordingly.

- Brokering messages across instances

- Handling the WebSocket connection lifecycle and gracefully catering for reconnection on another instance

Brokering messages across instances:

Here we introduce Redis Pub/Sub for cross-instance communication. We use it to notify other instances of Project X about the state of a connection, either to trigger clean-up or to relay messages to a specific client. Assume two instances of Project X are running, and a client disconnects from one and reconnects to the other. We want to tell the former Parent server to clean up every reference it holds to the client so that the client can be re-registered as a client of the new Parent server:

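```javascript
const Redis = require("ioredis");
const WebSocket = require("ws");

// Separate connections: one for PUBLISH, one that stays in subscriber mode
const pub = new Redis();
const sub = new Redis();

sub.subscribe("ws-messages");

sub.on("message", (channel, message) => {
  const { clientId, instanceId, data } = JSON.parse(message);

  // If the publisher named a target instance, every other instance ignores it
  if (instanceId && instanceId !== process.env.INSTANCE_ID) return;

  // `wss` is this instance's WebSocket server (created elsewhere in Project X)
  const client = Array.from(wss.clients).find(
    (ws) => ws.clientId === clientId && ws.readyState === WebSocket.OPEN
  );

  if (client) {
    client.send(JSON.stringify(data));
  }
});
```

We keep two separate Redis connections, one for publishing and one for subscribing, because once a connection issues SUBSCRIBE it enters subscriber mode and can no longer run regular commands such as PUBLISH.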

The subscriber receives messages published on the channel by other Project X instances and forwards each one to the relevant client. Before doing so, it checks that the client exists on this instance and that its socket is ready to receive messages. It is better to split this into two checks: the first tells you whether the client lives on this instance at all, so you can drop the message completely when it doesn’t; the second tells you whether the client is connected, so you can queue the message for later when it isn’t.
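A minimal sketch of the split check, assuming plain objects in place of `ws` sockets and an array in place of the offline queue (`handleBrokeredMessage` is a hypothetical name):

```javascript
// Mirrors the WebSocket readyState value for an open socket (ws: WebSocket.OPEN).
const OPEN = 1;

// Decide what to do with a brokered message on this instance.
// `clients` is any iterable of socket-like objects carrying a `clientId`;
// `queue` is a hypothetical array standing in for the offline queue.
function handleBrokeredMessage(clients, { clientId, data }, queue) {
  const client = Array.from(clients).find((ws) => ws.clientId === clientId);

  // Check 1: the client does not live on this instance, so drop the message.
  if (!client) return "dropped";

  // Check 2: it lives here but its socket is not open, so keep it for later.
  if (client.readyState !== OPEN) {
    queue.push({ clientId, data });
    return "queued";
  }

  client.send(JSON.stringify(data));
  return "sent";
}
```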

Keep in mind that you can also check the client’s state in Redis; we’ll see this as we proceed.

Handling WebSocket Connection Lifecycle:

This part is fairly straightforward: we want to capture a client’s connection state as it changes through WebSocket events. The only tricky part is detecting whether the client has changed servers on reconnection, since we have multiple instances.

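```javascript
// Assumes `Redis` (ioredis), `WebSocket` (ws), `pub`, `wss`, `server` and
// `logger` are set up elsewhere in Project X; `getQueueMessages` is mock logic.
const redis = new Redis(); // regular (non-subscriber) connection for GET/SET/DEL
const INSTANCE_ID = process.env.INSTANCE_ID;

// Function to handle client registration in Redis
const registerClient = async (clientId, instanceId) => {
  await redis.set(`ws:${clientId}`, instanceId, "EX", 3600); // Set a 1-hour expiration
};

const findClientInstance = async (clientId) => {
  return redis.get(`ws:${clientId}`);
};

// Remove the registration only if it still points at this instance, so a late
// "close" here cannot wipe a newer registration made by another instance
const unregisterClient = async (clientId, instanceId) => {
  await redis.eval(
    'if redis.call("GET", KEYS[1]) == ARGV[1] then return redis.call("DEL", KEYS[1]) end return 0',
    1,
    `ws:${clientId}`,
    instanceId
  );
};

wss.on("connection", (ws) => {
  ws.isAlive = true;

  // Heartbeat for connection health
  ws.on("pong", () => (ws.isAlive = true));

  // Handle incoming messages
  ws.on("message", async (message) => {
    let payload;
    try {
      payload = JSON.parse(message.toString());
    } catch {
      return; // ignore frames that are not valid JSON
    }

    // First message carrying a clientId acts as the connection acknowledgment
    if (payload.clientId && !ws.clientId) {
      const previousInstance = await findClientInstance(payload.clientId);

      // Ask the previous instance to clean up its stale connection
      if (previousInstance && previousInstance !== INSTANCE_ID) {
        await pub.publish(
          "ws-messages",
          JSON.stringify({
            clientId: payload.clientId,
            instanceId: previousInstance, // names the instance that should act on it
            data: { action: "disconnect" },
          })
        );
      }

      // Register clientId to this instance (first connection or reconnection)
      ws.clientId = payload.clientId;
      await registerClient(payload.clientId, INSTANCE_ID);

      // Handle queued messages
      const queuedMessage = getQueueMessages(payload.clientId); // Mock logic
      if (queuedMessage) {
        ws.send(queuedMessage); // This instance owns the socket, so no broadcast is needed
      }
    }
  });

  // Cleanup on disconnect: registered once per connection, not once per message
  ws.on("close", async () => {
    if (ws.clientId) {
      await unregisterClient(ws.clientId, INSTANCE_ID); // unregister from distributed storage
    }
  });
});

// Periodic health check: terminate sockets that did not answer the previous ping
const interval = setInterval(() => {
  wss.clients.forEach((ws) => {
    if (!ws.isAlive) {
      return ws.terminate();
    }
    ws.isAlive = false;
    ws.ping();
  });
}, 5000);

wss.on("close", () => {
  clearInterval(interval);
  logger.info("WebSocket server closed");
});

process.on("SIGINT", () => {
  logger.info("Shutting down server...");
  wss.clients.forEach((client) => client.terminate());
  server.close(() => {
    logger.info("Server closed gracefully");
    process.exit(0);
  });
});
```

We have written two methods that store and look up a client’s “clientId” and its “instanceId”, plus “unregisterClient”, which removes the entry only if it still belongs to this instance. Introducing “instanceId” helps us identify which server the client’s WebSocket connection currently lives on, or previously lived on. The “instanceId” is supplied to Project X as an environment variable (INSTANCE_ID) when it is deployed. Since you know which instance a client sits on, you can also route brokered messages directly to it rather than broadcasting them.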
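One consequence of the 1-hour expiration is worth keeping in mind: it cleans up registrations whose close event was missed, but a connection that stays open for more than an hour also loses its entry unless the key is refreshed, for example by repeating the `SET ... EX` or calling `EXPIRE` on each heartbeat (refreshing on heartbeat is an assumption in this sketch). The in-memory model below, with hypothetical names and an injected clock, shows the effect:

```javascript
// Hypothetical in-memory registry that mimics SET key value EX seconds:
// each entry carries an expiry time; the clock is passed in for testing.
class ExpiringRegistry {
  constructor(ttlMs) {
    this.ttlMs = ttlMs;
    this.entries = new Map(); // clientId -> { instanceId, expiresAt }
  }

  register(clientId, instanceId, now) {
    this.entries.set(clientId, { instanceId, expiresAt: now + this.ttlMs });
  }

  lookup(clientId, now) {
    const entry = this.entries.get(clientId);
    if (!entry || entry.expiresAt <= now) return null; // missing or expired
    return entry.instanceId;
  }
}

const ONE_HOUR = 60 * 60 * 1000;
const registry = new ExpiringRegistry(ONE_HOUR);

registry.register("client-42", "x-1", 0);
console.log(registry.lookup("client-42", ONE_HOUR + 1)); // null, although still connected

// Re-registering (e.g. on a heartbeat pong) pushes the expiry forward.
registry.register("client-42", "x-1", ONE_HOUR - 1000);
console.log(registry.lookup("client-42", ONE_HOUR + 1)); // x-1
```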

To handle reconnection to another instance, when the client sends its first message (the connection acknowledgment carrying its clientId), we check whether the instance stored in Redis is the one the client is now connected to. If they differ, the client has changed servers, so we notify the previous instance to clean up its connection references and then re-register the client on the new instance.
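The handover can be modelled in memory to check the order of the steps. This sketch assumes an `EventEmitter` in place of Redis Pub/Sub, a `Map` in place of the Redis keys, and a disconnect message that names the instance it is meant for (the `instanceId` field is an assumption, so that the new instance ignores its own broadcast). All names are hypothetical.

```javascript
const { EventEmitter } = require("events");

// Hypothetical stand-ins: `broker` for Redis Pub/Sub, `registry` for ws:<clientId> keys.
const broker = new EventEmitter();
const registry = new Map();

function createInstance(instanceId) {
  const sockets = new Map(); // clientId -> fake socket owned by this instance

  broker.on("ws-messages", (raw) => {
    const { clientId, instanceId: target, data } = JSON.parse(raw);
    if (target !== instanceId) return; // addressed to another instance
    if (data && data.action === "disconnect" && sockets.has(clientId)) {
      sockets.get(clientId).terminated = true; // drop the stale connection
      sockets.delete(clientId);
    }
  });

  return {
    sockets,
    // Runs when a client's first message (carrying its clientId) arrives.
    acknowledge(clientId) {
      const previous = registry.get(clientId);
      if (previous && previous !== instanceId) {
        broker.emit(
          "ws-messages",
          JSON.stringify({ clientId, instanceId: previous, data: { action: "disconnect" } })
        );
      }
      sockets.set(clientId, { terminated: false });
      registry.set(clientId, instanceId);
    },
  };
}

const a = createInstance("x-1");
const b = createInstance("x-2");

a.acknowledge("client-42");
const staleSocket = a.sockets.get("client-42");
b.acknowledge("client-42"); // the client reconnects to the other instance

console.log(registry.get("client-42")); // x-2
console.log(a.sockets.has("client-42"), staleSocket.terminated); // false true
```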

The rest of the implementation covers disconnections, which delete the client’s connection state (its “instanceId” entry) from Redis so it is ready to be registered again; an interval that pings connected clients to keep connections alive and terminates unresponsive ones; and graceful clean-up of WebSocket connections when the server shuts down.
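The disconnect clean-up has one race worth guarding against: if a client has already re-registered on another instance by the time its old socket’s `close` event fires, an unconditional `DEL` removes the new registration. A minimal in-memory sketch of the difference, with hypothetical names; against Redis itself the check-and-delete would need to be atomic, for example as a Lua script run with `EVAL`:

```javascript
// Hypothetical in-memory stand-in for the ws:<clientId> keys in Redis.
const registry = new Map();

// Unconditional delete, like a plain DEL on close.
function unregisterAlways(clientId) {
  registry.delete(clientId);
}

// Delete only if the key still points at the instance whose socket closed.
function unregisterIfOwner(clientId, instanceId) {
  if (registry.get(clientId) === instanceId) {
    registry.delete(clientId);
    return true;
  }
  return false;
}

// The client has already re-registered on x-2 when x-1's stale socket closes.
registry.set("client-42", "x-2");
unregisterIfOwner("client-42", "x-1"); // x-1 no longer owns the key: nothing deleted
console.log(registry.get("client-42")); // x-2

unregisterAlways("client-42"); // a plain DEL from x-1 wipes x-2's registration
console.log(registry.get("client-42")); // undefined
```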

Sending messages to clients:

Finally, how you send messages to clients depends on how creative or defensive your client connection state checks and cross-instance brokering are.

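```javascript
// Send a message to a client, wherever it is connected.
// Assumes `findClientInstance`, `wss`, `WebSocket` and `pub` from the snippets above.
const sendToClient = async (clientId, message) => {
  const clientInstance = await findClientInstance(clientId);

  if (clientInstance === process.env.INSTANCE_ID) {
    // This instance is the client's Parent server: send directly
    const wsClient = Array.from(wss.clients).find(
      (client) => client.clientId === clientId && client.readyState === WebSocket.OPEN
    );

    if (wsClient) {
      wsClient.send(JSON.stringify(message)); // same encoding the subscriber uses
    }
    // Otherwise the registration is stale: queue the message for later (not shown)
  } else {
    // The client sits on another instance: let the broker deliver it
    await pub.publish(
      "ws-messages",
      JSON.stringify({
        clientId,
        data: message,
      })
    );
  }
};
```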

We first check whether the client is connected to the current instance by fetching the “instanceId” saved in Redis and comparing it with the “instanceId” of the Project X instance doing the sending. If they match, we look up the connection among the WebSocket server’s clients and send the message directly, since this instance is the client’s Parent server. If they don’t match, we publish the message across instances so that the client’s Parent server can pick it up and deliver it. See the “subscribe” implementation above.
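To make the cost of broadcasting concrete, here is an in-memory model comparing the shared channel above with the per-instance channels described next. It assumes an `EventEmitter` in place of Redis Pub/Sub; `createCluster` and its fields are hypothetical names. With broadcasting, every instance parses every message; with direct routing, only the Parent server does.

```javascript
const { EventEmitter } = require("events");

// Hypothetical model: an EventEmitter stands in for Redis Pub/Sub.
function createCluster(instanceIds, { direct }) {
  const broker = new EventEmitter();
  const instances = instanceIds.map((id) => ({
    id,
    sockets: new Set(), // clientIds connected to this instance
    inspected: 0, // brokered messages this instance had to parse
    delivered: [], // payloads it sent to a local client
  }));

  for (const inst of instances) {
    // Broadcast: one shared channel. Direct: one channel per instance.
    const channel = direct ? `ws-messages-${inst.id}` : "ws-messages";
    broker.on(channel, (raw) => {
      inst.inspected += 1;
      const { clientId, data } = JSON.parse(raw);
      if (inst.sockets.has(clientId)) inst.delivered.push(data);
    });
  }

  // Publish a message for clientId, whose Parent server (from Redis) is ownerId.
  function publish(ownerId, clientId, data) {
    const channel = direct ? `ws-messages-${ownerId}` : "ws-messages";
    broker.emit(channel, JSON.stringify({ clientId, data }));
  }

  return { instances, publish };
}

const ids = ["x-1", "x-2", "x-3", "x-4"];
for (const direct of [false, true]) {
  const { instances, publish } = createCluster(ids, { direct });
  instances[2].sockets.add("client-42"); // client-42 is connected to x-3
  publish("x-3", "client-42", { hello: "world" });
  const inspected = instances.reduce((n, inst) => n + inst.inspected, 0);
  console.log(direct ? "direct:" : "broadcast:", inspected);
}
// broadcast: 4
// direct: 1
```

Either way the client receives the message exactly once; what changes is how many instances do work for it.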

Additionally, since we already know the client’s “instanceId”, we can route the message directly to that Project X instance instead of broadcasting it, by appending the “instanceId” to the channel name on both publish and subscribe.

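```javascript
// Direct routing: each instance subscribes only to its own channel
const pub = new Redis();
const sub = new Redis();

sub.subscribe(`ws-messages-${process.env.INSTANCE_ID}`);

sub.on("message", async (channel, message) => {
  // ...
});

// When sending, target the instance found in Redis
// (clientInstance, clientId and message as in the send example above)
pub.publish(
  `ws-messages-${clientInstance}`,
  JSON.stringify({
    clientId,
    data: message,
  })
);
```

Summary: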

We introduced a broker for communication between instances, so we could relay messages about the state of a WebSocket connection, forward messages to other instances, and route messages directly to the instance holding a given client. “InstanceIds” let us quickly identify where a client currently sits or previously sat, and route messages to a particular instance. We used Redis for Pub/Sub since it’s fast and distributed, and also as distributed storage for “clientIds” and their “instanceIds”; it could also store the state and availability of each client connection.

I hope this has given you some insight into scaling a stateful application that manages WebSocket connections, avoiding the pitfalls, and architecting a better greenfield version.

Cheers :)